I have decided on another method for recreating bird song, so this post rounds up and shares my first way of thinking about synthetic chirping. I still find superimposed oscillators strangely attractive, but there are more efficient methods for this project. Layering oscillators is interesting in a highly abstract way: I feel I have built a kind of reverse Fourier transform analyser, inspired by wasteful genetic methods.

Below you will find the code I developed in Python and Pure Data. The Python code generates sequences, and Pure Data converts them into sound. The genetic algorithm tries to optimize the parameters of the sound generation.
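To make the idea of superimposed oscillators concrete, here is a minimal sketch in plain Python. It is not the code from the download: in my setup Pure Data does the actual sound generation, and the `render` function, the (frequency, amplitude) pairs and the sample rate are all assumptions chosen for illustration.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed for this sketch)

def render(oscillators, duration, sample_rate=SAMPLE_RATE):
    """Sum sine oscillators given as (frequency_hz, amplitude) pairs."""
    n_samples = round(duration * sample_rate)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        # Every oscillator contributes one sine wave; the chirp is their sum.
        samples.append(
            sum(amp * math.sin(2 * math.pi * freq * t) for freq, amp in oscillators)
        )
    # Normalise so the loudest sample sits at +/-1.0 and the sum never clips.
    peak = max((abs(s) for s in samples), default=0.0)
    if peak > 0:
        samples = [s / peak for s in samples]
    return samples

# Three hypothetical oscillators in a bird-like frequency range, 50 ms long.
chirp = render([(3000.0, 1.0), (4500.0, 0.5), (6200.0, 0.25)], duration=0.05)
```

The genetic algorithm then only has to search over the list of oscillator parameters, not over the audio samples themselves.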

I’ve coded the project so there are three possible ways to define song fitness (inspired by ideas about song learning in Passeriformes):

– interactive (user-defined fitness)

– spectrogram comparison to a model using SSIM and ORB

– an object detection model trained on my canaries’ vocabulary (included in the download)

To use the code, first generate a random population with the command “GenerateRandomPopulation(generationnumber, amount, complexity)”, where generationnumber should be 1, amount sets how many entities are in the generation, and complexity defines how many oscillators the setup uses. I found that 100 entities with a complexity of around 8 work well. Note that the algorithm can add or remove oscillators during the process. Once an initial population is generated, you can choose how to evolve the compositions:

INTERACTIVE MODE

--> InteractiveEvolutionLoop(generation,evolutions,amount)

Prompts the user to select the fittest compositions

Object detection

--> EvolutionLoop(generation,evolutions)

auto-evolves using the object detection model

SSIM / ORB

--> SSIMEvolutionLoop(generation,evolutions)

auto-evolves using SSIM/ORB relative to the model

Interactive mode

The following files are a selection of results from 20 generations of interactive selection. In my opinion, the interactive mode is by far the most fun and interesting way to use this software. I wonder what kind of weird stuff one could breed. Most fascinating is the ability to keep kneading the compositions.
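For readers who want the gist of the breeding loop without opening the download, here is a rough sketch. It is a simplification under stated assumptions, not the project code: the function names, the parameter ranges and the mutation rate are hypothetical, and the sketch uses mutation only. The one thing taken from the description above is that mutation can add or remove oscillators.

```python
import random

def random_oscillator(rng):
    # Hypothetical parameter set: frequency in Hz and amplitude in 0..1.
    return {"freq": rng.uniform(1000.0, 8000.0), "amp": rng.uniform(0.0, 1.0)}

def generate_random_population(amount, complexity, rng):
    """Return `amount` entities, each a list of `complexity` oscillators."""
    return [[random_oscillator(rng) for _ in range(complexity)] for _ in range(amount)]

def mutate(entity, rng, rate=0.2):
    """Copy an entity, jitter its parameters, and sometimes add or drop an oscillator."""
    child = [dict(osc) for osc in entity]
    for osc in child:
        if rng.random() < rate:
            osc["freq"] = max(20.0, osc["freq"] * rng.uniform(0.9, 1.1))
        if rng.random() < rate:
            osc["amp"] = min(1.0, max(0.0, osc["amp"] + rng.uniform(-0.1, 0.1)))
    if rng.random() < rate:
        child.append(random_oscillator(rng))   # grow: one extra oscillator
    if len(child) > 1 and rng.random() < rate:
        child.pop(rng.randrange(len(child)))   # shrink, but never to zero
    return child

def next_generation(population, chosen_indices, rng):
    """Refill the population with mutated copies of the entities the listener picked."""
    parents = [population[i] for i in chosen_indices]
    return [mutate(rng.choice(parents), rng) for _ in range(len(population))]

rng = random.Random(1)
population = generate_random_population(amount=100, complexity=8, rng=rng)
# In interactive mode the indices would come from the listener; here they are fixed.
population = next_generation(population, chosen_indices=[3, 17, 42], rng=rng)
```

Swapping the fixed indices for the top-scoring entities of an automated fitness function would turn the same loop into one of the non-interactive modes.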

SSIM & ORB

The worst part about this method is that it totally disregards the audio component and uses only image data from the spectrogram. The audio-to-spectrogram translation is very lossy, which makes this method iffy. The nice part is that it runs fully automated and isn’t too harsh on the CPU. It is teleological and strives to be an exact copy of the model file, which is kind of uninteresting.
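To show what an image-only fitness looks like, here is a simplified sketch of the SSIM half of the comparison. It computes SSIM once over the whole image, whereas library implementations (scikit-image and OpenCV both provide one) usually average it over small sliding windows, so treat `global_ssim` as an illustration rather than what the download does. ORB, a keypoint-matching technique, is left out entirely, and the tiny pixel grids stand in for real spectrograms.

```python
def global_ssim(image_a, image_b, dynamic_range=255.0):
    """Single-window SSIM between two equally sized greyscale images (lists of rows)."""
    a = [float(p) for row in image_a for p in row]
    b = [float(p) for row in image_b for p in row]
    if len(a) != len(b) or not a:
        raise ValueError("images must be non-empty and have the same size")
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    var_a = sum((x - mean_a) ** 2 for x in a) / n
    var_b = sum((y - mean_b) ** 2 for y in b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / n
    # Standard stabilising constants from the SSIM definition.
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    return ((2 * mean_a * mean_b + c1) * (2 * cov + c2)) / (
        (mean_a ** 2 + mean_b ** 2 + c1) * (var_a + var_b + c2)
    )

# Toy 3x3 "spectrograms": a model, a near copy, and an inverted version.
model = [[0, 50, 200], [10, 255, 30], [0, 40, 180]]
candidate = [[0, 60, 190], [20, 240, 30], [5, 40, 170]]
inverted = [[255 - p for p in row] for row in model]

fitness_close = global_ssim(model, candidate)
fitness_far = global_ssim(model, inverted)
```

A score of 1 means the two images are identical, which is exactly why this fitness pulls every generation towards a copy of the model.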

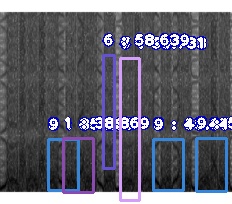

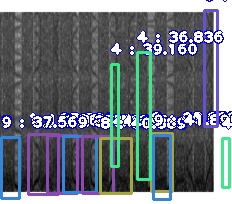

Object detection

And finally, the most computationally intensive way of automated breeding. I started by annotating a dataset of song spectrograms. Using that dataset, I trained an object detection model (starting from the well-known YOLO model). Here, once again, the teleological aspect bothers me.

Conclusion

All in all, I feel I made a tidal wave calculating machine running at a higher frequency, where the formation of virtual gears is powered by genetic algorithms. I think the automated ways of generating songs are quite boring and result in uninteresting audio: they regurgitate the model song or vocabulary, but in an unsurprising way. They often result in a noisy, high-amplitude grey matter.

As far as I’m concerned, the most interesting way to use the software is the interactive method (by far). It never fails to surprise me, and finding interesting sounds by chance is just fun (if you accept the occasional assault on the ears). It seems to be the most straightforward route to diversity, contrast and chaotic, melodic, non-repetitive sound. With the same mutation constants as the other methods, it needs the fewest generations to get to interesting compositions. If one wanted to try some data juggling, this method could be used with the western 12-tone system to make more recognizable things resembling ‘music’. The way in which spectrograms are generated reminds me of the thrill of slowly seeing a meaningful image show itself in analogue photographic development.
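The 12-tone idea mentioned above could be as simple as snapping every oscillator frequency to the nearest note of 12-tone equal temperament before it is sent to the synth. I have not built this; the sketch below is only an assumption of how it might look, with A4 = 440 Hz as the reference pitch and a hypothetical `quantize_to_12tet` helper.

```python
import math

A4_HZ = 440.0   # reference pitch (a common convention, assumed here)
A4_MIDI = 69    # MIDI note number of A4

def quantize_to_12tet(freq_hz):
    """Snap a frequency to the nearest note of 12-tone equal temperament."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # Distance from A4 in semitones, rounded to the nearest whole note.
    note = round(A4_MIDI + 12 * math.log2(freq_hz / A4_HZ))
    return A4_HZ * 2 ** ((note - A4_MIDI) / 12)

# Three hypothetical oscillator frequencies pulled onto the chromatic scale.
snapped = [quantize_to_12tet(f) for f in (3000.0, 4500.0, 6200.0)]
```

Applied after mutation, this would keep the genetic search free to wander while the audible result stays on recognizable pitches.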